You’ve probably heard of caching before, but do you know just how much it can improve the performance of your website or application? In this article, we take a closer look at advanced caching techniques and how to use them to make your site or application faster and more responsive. We cover the basics, the main types of caching, storage mechanisms, configuration, strategies, performance tuning, monitoring, security, and CDN integration.

Basics of Caching

Definition of caching

Caching is a technique used in computer science to improve the performance and efficiency of systems by storing frequently accessed data in a faster and more easily retrievable location. It involves temporarily storing copies of data or computations in order to reduce the time and resources required for subsequent access or calculations. By keeping this data closer to the user or application, caching helps to minimize latency and improve overall system responsiveness.

How caching works

Caching works by creating a cache, which is essentially a temporary storage space that holds copies of data or computations. When a user or application requests data, the caching system first checks if the data is already stored in the cache. If the data is present, it is retrieved from the cache, resulting in a significantly faster response time. If the data is not in the cache, the system retrieves it from the original source, stores a copy in the cache, and then returns it to the requester. This way, subsequent requests for the same data can be served directly from the cache, eliminating the need to access the original source and reducing the overall load on the system.
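
The lookup flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production cache: `SimpleCache`, `slow_lookup`, and the `user:1` key are hypothetical names, and the "original source" is simulated by a plain function.

```python
from typing import Callable, Dict, List


class SimpleCache:
    """Check the cache first; on a miss, load from the source and keep a copy."""

    def __init__(self, load_from_source: Callable[[str], str]) -> None:
        self._load = load_from_source
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> str:
        if key in self._store:
            # Data is already cached: return it without touching the source.
            return self._store[key]
        # Data is not cached: fetch it, store a copy, then return it.
        value = self._load(key)
        self._store[key] = value
        return value


source_calls: List[str] = []


def slow_lookup(key: str) -> str:
    # Hypothetical stand-in for a database query or remote API call.
    source_calls.append(key)
    return f"value-for-{key}"


cache = SimpleCache(slow_lookup)
first = cache.get("user:1")   # goes to the source
second = cache.get("user:1")  # served from the cache
```

After both calls, `source_calls` contains a single entry, because the second request never reached the source.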

Benefits of caching

Caching offers several benefits, making it an essential technique in optimizing system performance:

- Improved response time: By storing frequently accessed data closer to the user or application, caching reduces the time required to retrieve the data, resulting in faster response times.
- Reduced resource usage: Caching reduces the load on the original data source or system by serving data directly from the cache. This lowers bandwidth use, processing load, and overall resource consumption.
- Increased scalability: Caching improves system scalability by reducing the load on backend resources. By serving frequently accessed data from the cache, the system can handle more concurrent users or requests without affecting performance.
- Enhanced user experience: Faster response times and reduced latency lead to a better user experience, particularly for applications that involve real-time interactions or content delivery.
- Cost savings: Caching can significantly reduce infrastructure costs by minimizing the need for expensive hardware or bandwidth upgrades. It allows systems to handle higher loads while maintaining acceptable performance levels.
- Improved fault tolerance: Caching can provide a level of fault tolerance by serving cached data even if the original data source or system is temporarily unavailable. This can help maintain service continuity and prevent disruptions for users or applications.

Types of Caching

Page caching

Page caching involves caching the entire HTML content of a web page. It is typically used for static pages or pages with minimal dynamic content. When a user requests a web page, the cached HTML is served directly from the cache, bypassing the need for server-side processing or database queries. This significantly improves response times and reduces the load on the web server.

Database caching

Database caching involves caching the results of database queries or data retrieval operations. By storing frequently accessed data in memory, subsequent requests for the same data can be served directly from the cache, eliminating the need to access the database. This reduces database load and improves overall system performance.
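
As a rough sketch of query result caching, the example below keys an in-memory dictionary on the SQL text and its parameters. It uses Python's built-in `sqlite3` module with an in-memory database purely for illustration; the `products` table, the `cached_query` helper, and the `stats` counter are assumptions made for this example.

```python
import sqlite3
from typing import Dict, List, Tuple

# Small in-memory database used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO products (name) VALUES (?)", [("apple",), ("pear",)])

query_cache: Dict[Tuple[str, tuple], List[tuple]] = {}
stats = {"db_queries": 0}


def cached_query(sql: str, params: tuple = ()) -> List[tuple]:
    """Return cached rows for (sql, params), querying the database only on a miss."""
    key = (sql, params)
    if key not in query_cache:
        stats["db_queries"] += 1
        query_cache[key] = conn.execute(sql, params).fetchall()
    return query_cache[key]


rows_a = cached_query("SELECT name FROM products WHERE id = ?", (1,))
rows_b = cached_query("SELECT name FROM products WHERE id = ?", (1,))
```

The second call returns the same rows without running the query again. A real setup would also need to drop or refresh these entries when the underlying table changes.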

Object caching

Object caching involves caching specific objects or data structures in memory. This is commonly used in applications that rely on object-oriented programming, where frequently used objects are stored in a cache for quick access. Object caching can significantly improve application performance, particularly for complex operations that involve object creation or manipulation.
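
One simple way to experiment with object caching in Python is the standard library's `functools.lru_cache` decorator, which keeps the objects returned by a function in memory. In the sketch below, `Report` and `build_report` are hypothetical, and the summation stands in for any expensive object construction.

```python
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class Report:
    customer_id: int
    total: int


@lru_cache(maxsize=128)
def build_report(customer_id: int) -> Report:
    # Hypothetical expensive construction; replace with real work.
    total = sum(range(customer_id * 1000))
    return Report(customer_id, total)


first_report = build_report(7)   # constructed and cached
second_report = build_report(7)  # the same object, returned from the cache
info = build_report.cache_info()
```

Here `first_report is second_report` holds, and `info` reports one hit and one miss.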

Opcode caching

Opcode caching applies to programming languages that compile source code to bytecode or another intermediate form before executing it. It involves caching the compiled bytecode of scripts or programs to avoid the overhead of repeated compilation. PHP's OPcache extension is a well-known example. By keeping the precompiled code in memory, the system can skip the compilation step for subsequent requests, resulting in faster execution times.

Key Concepts in Caching

Cache hit and miss

In caching, a cache hit refers to a successful retrieval of data from the cache. When a user or application requests data, and it is found in the cache, it is considered a cache hit. This results in faster response times and reduces the load on backend resources. On the other hand, a cache miss occurs when the requested data is not found in the cache. In this case, the system needs to retrieve the data from the original source, resulting in increased latency and resource consumption.

Cache expiration

Cache expiration refers to the time limit, often called the time to live (TTL), set for data stored in the cache. When an entry reaches its expiration time, it is considered stale, and the system must retrieve the current data from the original source. Setting an appropriate expiration time is crucial: too long and users may be served outdated information, too short and the cache provides little benefit.
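
Time-based expiration can be sketched by storing an expiry timestamp next to each value. The `TTLCache` class below is a hypothetical minimal version (TTL stands for time to live, the time limit per entry); the clock is injectable so the example can simulate the passage of time without sleeping.

```python
import time
from typing import Any, Callable, Dict, List, Optional, Tuple


class TTLCache:
    """Cache whose entries expire a fixed number of seconds after being set."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Entry has expired: drop it so the caller reloads from the source.
            del self._store[key]
            return None
        return value


now: List[float] = [0.0]  # fake clock for the demonstration
ttl_cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
ttl_cache.set("homepage", "<html>...</html>")
fresh = ttl_cache.get("homepage")  # still within 60 seconds
now[0] = 61.0
stale = ttl_cache.get("homepage")  # expired, so None is returned
```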

Cache invalidation

Cache invalidation refers to the process of removing or updating cached data when it becomes obsolete or no longer reflects the latest state. This is particularly important for dynamic or frequently changing data. Cache invalidation can be triggered manually by a system administrator or through automated mechanisms such as time-based expiration or event-based invalidation.
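
Event-based invalidation can be as simple as dropping the cached entry whenever the underlying data is written. The sketch below assumes a dictionary standing in for the database; `read_article` and `update_article` are hypothetical helpers.

```python
from typing import Dict, Optional

database: Dict[str, str] = {"article:1": "Draft title"}  # stand-in for the real source
article_cache: Dict[str, str] = {}


def read_article(key: str) -> Optional[str]:
    if key not in article_cache:
        value = database.get(key)
        if value is None:
            return None
        article_cache[key] = value
    return article_cache[key]


def update_article(key: str, new_value: str) -> None:
    database[key] = new_value
    # Invalidate on write so the next read fetches the new value.
    article_cache.pop(key, None)


before = read_article("article:1")
update_article("article:1", "Final title")
after = read_article("article:1")
```

Without the `pop` call in `update_article`, the second read would keep returning the old title until the entry expired.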

Cache Storage Mechanisms

In-memory caching

In-memory caching involves storing cached data in the server’s main memory (RAM). This allows for extremely fast access and retrieval times, as reading data from memory is significantly faster than retrieving it from disk or a remote location. In-memory caching is ideal for frequently accessed data or data that needs to be processed quickly.

Disk-based caching

Disk-based caching involves storing cached data on the server’s hard disk or solid-state drive (SSD). While disk-based caching may not offer the same level of performance as in-memory caching, it provides a larger storage capacity and can handle a wider range of data sizes. Disk-based caching is suitable for less frequently accessed data or data that does not require immediate retrieval.

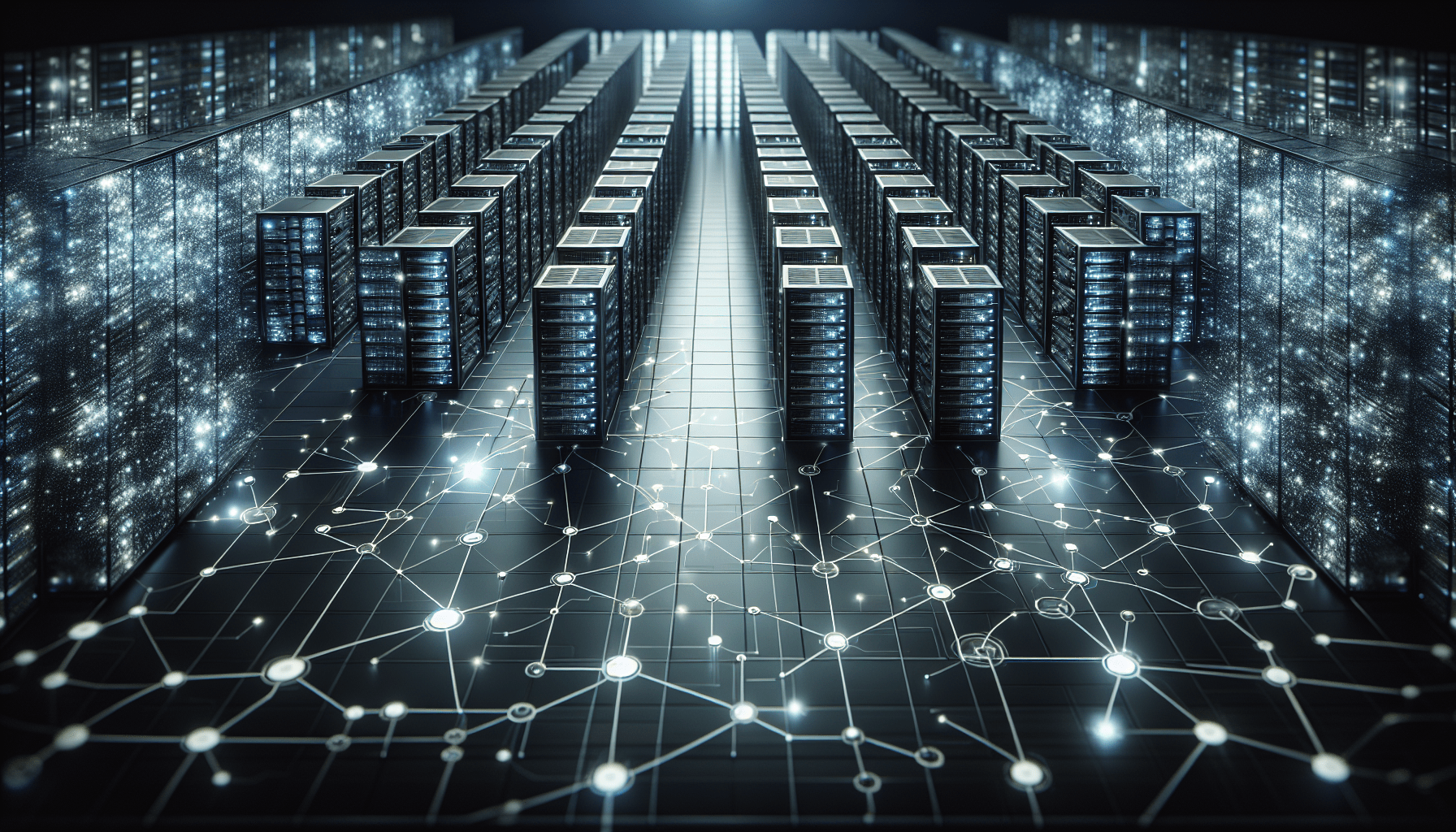

Distributed caching

Distributed caching involves spreading the cached data across multiple servers or nodes within a network. This allows for increased storage capacity, improved fault tolerance, and scalability. Distributed caching systems often use a hashing scheme, such as consistent hashing, to determine which node should store or retrieve a given key, which keeps data evenly distributed and lookups fast.

Cache Configurations

Cache size and capacity

The cache size and capacity refer to the maximum amount of data that can be stored in the cache. Determining the appropriate cache size requires considering factors such as available memory or disk space, expected data volume, and the system’s performance requirements. It is important to strike a balance between having enough cache space to store frequently accessed data and not consuming excessive resources.

Cache eviction policies

Cache eviction policies determine which entries the cache removes to make room for new data once it reaches its maximum capacity. Common policies include least recently used (LRU), which evicts the entry that has gone longest without being accessed; least frequently used (LFU), which evicts the entry with the fewest accesses; and random eviction. Choosing the right policy depends mainly on data access patterns: LRU suits workloads where recently used data is likely to be requested again, while LFU suits workloads with a stable set of popular items.
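
To make least recently used eviction concrete, here is a small sketch built on `collections.OrderedDict` from the Python standard library. The `LRUCache` class and its method names are illustrative choices, not a reference implementation.

```python
from collections import OrderedDict
from typing import Any, List, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._items:
            return None
        # Mark the entry as most recently used.
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key: str, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            # The first item is the least recently used one.
            self._items.popitem(last=False)

    def keys(self) -> List[str]:
        return list(self._items)


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")     # "a" becomes the most recently used entry
lru.put("c", 3)  # capacity exceeded, so "b" is evicted
```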

Cache partitioning

Cache partitioning involves dividing the cache into smaller subsets or partitions. Each partition is responsible for storing a specific portion of the cached data. This allows for efficient retrieval and distribution of data across multiple nodes or servers in a distributed caching setup. Cache partitioning can improve system scalability and performance, particularly in high traffic or large-scale applications.
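
A simple way to picture partitioning is to hash each key and use the result to pick a partition. The sketch below assumes partitions are local dictionaries; in a distributed setup each partition would live on a separate node. `PartitionedCache` and the `session:` keys are hypothetical.

```python
import hashlib
from typing import Dict, List, Optional


class PartitionedCache:
    """Spread keys across a fixed number of partitions using a stable hash."""

    def __init__(self, partition_count: int) -> None:
        if partition_count <= 0:
            raise ValueError("partition_count must be positive")
        self._partitions: List[Dict[str, str]] = [{} for _ in range(partition_count)]

    def index_for(self, key: str) -> int:
        # SHA-256 gives the same result on every run and every machine,
        # unlike Python's built-in hash() for strings.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self._partitions)

    def put(self, key: str, value: str) -> None:
        self._partitions[self.index_for(key)][key] = value

    def get(self, key: str) -> Optional[str]:
        return self._partitions[self.index_for(key)].get(key)

    def sizes(self) -> List[int]:
        return [len(partition) for partition in self._partitions]


partitioned = PartitionedCache(partition_count=4)
for i in range(100):
    partitioned.put(f"session:{i}", f"data-{i}")
```

One limitation of this modulo approach is that changing the number of partitions remaps most keys. Consistent hashing is a common way to reduce that reshuffling.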

Caching Strategies

Full page caching

Full page caching involves caching the entire HTML content of a web page, including the static and dynamic components. This is suitable for pages with minimal or no user-specific content. By serving the entire page from the cache, full page caching eliminates the need for server-side processing or database queries, resulting in significantly faster response times and reduced server load.

Partial page caching

Partial page caching involves caching specific sections or components of a web page that are frequently accessed or computationally expensive. This is useful for pages with dynamic content that requires server-side processing. By caching only the relevant parts of the page, partial page caching reduces the time and resources required for data retrieval or computation while still providing a dynamic user experience.

Fragment caching

Fragment caching involves caching smaller sections or fragments of a web page, such as a navigation menu, sidebar, or user-specific content. This allows for the selective caching of specific page elements that are shared across multiple pages or accessed frequently. Fragment caching can significantly improve performance by reducing the need for redundant computations or data retrieval for each page request.
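
As an illustration, the sketch below caches a navigation fragment once and reuses it across two different pages. `cached_fragment`, `render_nav`, and `render_page` are hypothetical helpers, and real template engines typically offer their own fragment caching facilities.

```python
from typing import Callable, Dict

fragment_cache: Dict[str, str] = {}
render_counts: Dict[str, int] = {}


def cached_fragment(name: str, render: Callable[[], str]) -> str:
    """Render a fragment once, then reuse the stored HTML."""
    if name not in fragment_cache:
        render_counts[name] = render_counts.get(name, 0) + 1
        fragment_cache[name] = render()
    return fragment_cache[name]


def render_nav() -> str:
    # Hypothetical shared fragment; imagine this needs a database query.
    return "<nav>Home | Blog | About</nav>"


def render_page(title: str) -> str:
    nav = cached_fragment("nav", render_nav)
    # The page-specific part is rendered fresh on every request.
    return f"{nav}<main><h1>{title}</h1></main>"


home = render_page("Home")
blog = render_page("Blog")
```

Both pages include the navigation markup, but `render_nav` runs only once.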

Optimizing Cache Performance

Cache hierarchy

Cache hierarchy refers to the organization of multiple cache levels, each with varying storage capacity and access speeds. This hierarchical structure allows for efficient data retrieval based on access patterns and data importance. Frequently accessed or critical data can be stored in faster and smaller caches closer to the user or application, while less frequently accessed or less critical data can be stored in larger but slower caches.
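
A two-level hierarchy can be sketched as a small, fast tier in front of a larger one. In the example below both tiers are plain dictionaries, which is an assumption for illustration only; in practice the second tier might be a disk or a remote cache. The small tier evicts its oldest entry when full, and `l1_capacity` is assumed to be at least 1.

```python
from typing import Dict, Optional


class TwoLevelCache:
    """Small L1 tier in front of a larger L2 tier."""

    def __init__(self, l1_capacity: int) -> None:
        if l1_capacity <= 0:
            raise ValueError("l1_capacity must be positive")
        self._l1: Dict[str, str] = {}
        self._l2: Dict[str, str] = {}
        self._l1_capacity = l1_capacity
        self.l1_hits = 0
        self.l2_hits = 0

    def put(self, key: str, value: str) -> None:
        # New data lands in the larger tier; reads promote it to L1.
        self._l2[key] = value

    def get(self, key: str) -> Optional[str]:
        if key in self._l1:
            self.l1_hits += 1
            return self._l1[key]
        if key in self._l2:
            self.l2_hits += 1
            self._promote(key, self._l2[key])
            return self._l2[key]
        return None

    def _promote(self, key: str, value: str) -> None:
        if len(self._l1) >= self._l1_capacity:
            # Dictionaries keep insertion order, so this is the oldest entry.
            oldest = next(iter(self._l1))
            del self._l1[oldest]
        self._l1[key] = value


tiers = TwoLevelCache(l1_capacity=1)
tiers.put("a", "A")
tiers.put("b", "B")
tiers.get("a")  # L2 hit, promoted to L1
tiers.get("a")  # L1 hit
tiers.get("b")  # L2 hit, replaces "a" in L1
```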

Cache warming

Cache warming involves preloading the cache with frequently accessed data or the results of time-intensive computations before they are requested. By proactively populating the cache, cache warming reduces the likelihood of cache misses, especially right after a deployment or restart when the cache would otherwise be empty. This can be achieved through scheduled tasks or by analyzing access patterns to identify the most commonly accessed data.
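
A warming routine can be little more than a loop over the keys you expect to be popular. In this sketch, `warm_cache`, `load_page`, and the list of paths are hypothetical; in a real system the list might come from access logs and the function might run as a scheduled task.

```python
from typing import Callable, Dict, Iterable


def warm_cache(cache: Dict[str, str], keys: Iterable[str],
               load: Callable[[str], str]) -> int:
    """Preload the given keys and return how many entries were added."""
    loaded = 0
    for key in keys:
        if key not in cache:
            cache[key] = load(key)
            loaded += 1
    return loaded


def load_page(path: str) -> str:
    # Hypothetical stand-in for rendering a page or running a slow query.
    return f"<html>rendered {path}</html>"


page_cache: Dict[str, str] = {}
popular_paths = ["/", "/pricing", "/docs"]
warmed = warm_cache(page_cache, popular_paths, load_page)
```

Running `warm_cache` a second time with the same paths adds nothing, since every entry is already present.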

Using compression

Using compression techniques can optimize cache performance by reducing the storage space required for cached data. Compressed data takes up less space in memory or on disk, allowing more data to be stored in the cache. Additionally, compressed data can be transmitted faster over the network, leading to improved response times for remote caches. However, compressing and decompressing data costs CPU time, so it is important to balance the compression ratio against that overhead. Small or already compressed values, such as JPEG images, often gain little from compression.
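
The trade-off can be explored with Python's built-in `zlib` module. The `CompressedCache` class below is a hypothetical sketch that compresses text on write and decompresses it on read; the repeated HTML string is chosen because repetitive data compresses well.

```python
import zlib
from typing import Dict, Optional


class CompressedCache:
    """Store text values as zlib-compressed bytes."""

    def __init__(self, level: int = 6) -> None:
        # Higher levels compress more but cost more CPU time.
        self._level = level
        self._store: Dict[str, bytes] = {}

    def set(self, key: str, text: str) -> None:
        self._store[key] = zlib.compress(text.encode("utf-8"), self._level)

    def get(self, key: str) -> Optional[str]:
        blob = self._store.get(key)
        if blob is None:
            return None
        return zlib.decompress(blob).decode("utf-8")

    def stored_bytes(self, key: str) -> int:
        return len(self._store[key])


page = "<li>repeated row</li>" * 500
compressed_cache = CompressedCache()
compressed_cache.set("listing", page)
original_size = len(page.encode("utf-8"))
stored_size = compressed_cache.stored_bytes("listing")
```

Comparing `original_size` with `stored_size` shows how much space the compressed entry saves for this particular value.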

Cache Monitoring and Debugging

Logging cache activities

Logging cache activities involves capturing and analyzing log data related to cache operations. This includes information such as cache hits, cache misses, cache evictions, and cache expiration. By monitoring cache activities, system administrators and developers can gain insights into cache performance, identify bottlenecks or issues, and tune cache configurations for optimal performance.

Analyzing cache performance

Analyzing cache performance involves gathering and analyzing performance metrics to assess the effectiveness of caching strategies. This can be done through tools or frameworks that provide detailed statistics on cache hit rates, response times, data freshness, and resource utilization. By analyzing cache performance, system administrators can make informed decisions on cache configurations, eviction policies, and cache sizing to maximize performance.
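
The most basic of these metrics, the cache hit ratio, is the number of hits divided by the total number of lookups. The sketch below tracks it with a small hypothetical `CacheStats` class over a made-up sequence of requests.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0

    def record(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        # Avoid dividing by zero before any lookups have happened.
        return self.hits / total if total else 0.0


stats = CacheStats()
lookup_cache: Dict[str, str] = {}
for key in ["a", "b", "a", "a", "c", "b"]:
    hit = key in lookup_cache
    stats.record(hit)
    if not hit:
        lookup_cache[key] = key.upper()
```

For this request sequence the first lookups of `a`, `b`, and `c` miss and the three repeats hit, giving a hit ratio of 0.5.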

Troubleshooting cache issues

Troubleshooting cache issues involves identifying and resolving problems or errors related to caching. This can include investigating cache inconsistencies, cache coherence issues, or cache configuration errors. Troubleshooting cache issues often requires a thorough understanding of the caching system’s architecture, cache configurations, and underlying technologies. It may involve debugging code, tuning cache parameters, or analyzing system logs to pinpoint and address the root cause of the issue.

Cache Security

Securing cached data

Securing cached data involves implementing measures to protect sensitive or confidential information stored in the cache. This can include encryption, access control mechanisms, and data obfuscation techniques. By securing cached data, systems can prevent unauthorized access or data leakage, ensuring the privacy and integrity of sensitive information.

Preventing cache poisoning

Cache poisoning refers to a security vulnerability where an attacker injects malicious or fake data into the cache, potentially leading to incorrect or compromised results. Preventing cache poisoning requires implementing security measures such as input validation, data integrity checks, and proper cache invalidation mechanisms. Regular monitoring and auditing of the cache can also help detect and mitigate cache poisoning attacks.
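
One form of data integrity check is to store a keyed signature alongside each cached value and verify it on every read. The sketch below uses HMAC-SHA256 from Python's standard library. It addresses only tampering with entries in the cache store and is not a complete defense against cache poisoning. `SECRET_KEY`, `put_signed`, and `get_verified` are hypothetical, and a real key should come from secure configuration rather than source code.

```python
import hashlib
import hmac
from typing import Dict, Optional, Tuple

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical; load from secure config

signed_store: Dict[str, Tuple[bytes, bytes]] = {}


def _sign(key: str, value: bytes) -> bytes:
    # Bind the signature to the cache key so entries cannot be swapped.
    message = key.encode("utf-8") + b"\x00" + value
    return hmac.new(SECRET_KEY, message, hashlib.sha256).digest()


def put_signed(key: str, value: bytes) -> None:
    signed_store[key] = (value, _sign(key, value))


def get_verified(key: str) -> Optional[bytes]:
    entry = signed_store.get(key)
    if entry is None:
        return None
    value, tag = entry
    if not hmac.compare_digest(tag, _sign(key, value)):
        # Signature mismatch: treat the entry as poisoned and discard it.
        del signed_store[key]
        return None
    return value


put_signed("profile:7", b"alice")
trusted = get_verified("profile:7")

# Simulate an attacker overwriting the value without knowing the secret key.
_, old_tag = signed_store["profile:7"]
signed_store["profile:7"] = (b"mallory", old_tag)
tampered = get_verified("profile:7")
```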

Mitigating cache-based attacks

Cache-based attacks exploit vulnerabilities in the caching system to launch malicious activities such as data exfiltration, denial of service (DoS), or injection attacks. Mitigating cache-based attacks requires implementing security measures such as access controls, rate limiting, and input sanitization. It is also important to regularly update and patch the caching system to address any known security vulnerabilities.

Integration with Content Delivery Networks (CDNs)

Benefits of CDN integration

Integrating a caching system with a Content Delivery Network (CDN) offers several benefits. CDNs are geographically distributed networks of servers that cache and deliver content to users based on their location, resulting in faster content delivery and reduced network latency. By leveraging CDNs, caching systems can offload the delivery of static or cacheable content, improve scalability, and enhance global performance for users across different regions.

Configuring CDN caching

Configuring CDN caching involves setting up caching rules and policies within the CDN infrastructure to determine what content should be cached, how it should be cached, and for how long. By properly configuring CDN caching, organizations can optimize cache hit rates, control cache expiration, and improve overall content delivery performance. This may involve specifying cache control headers, setting cache keys, or defining caching directives.
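
On the origin side, much of this configuration comes down to the `Cache-Control` header sent with each response. The sketch below picks a header based on the request path. The directives are standard HTTP, but the path prefixes and lifetimes are assumptions chosen for illustration, and individual CDNs may add their own settings on top.

```python
from typing import Dict


def cache_headers(path: str) -> Dict[str, str]:
    """Choose a Cache-Control header for a response based on its path."""
    if path.startswith("/static/"):
        # Versioned assets: cache for a year and never revalidate.
        return {"Cache-Control": "public, max-age=31536000, immutable"}
    if path.startswith("/account/"):
        # User-specific pages: keep them out of shared caches entirely.
        return {"Cache-Control": "private, no-store"}
    # Everything else: short browser lifetime, longer lifetime on shared
    # caches such as a CDN (s-maxage applies only to shared caches).
    return {"Cache-Control": "public, max-age=60, s-maxage=300"}


static_headers = cache_headers("/static/app.css")
account_headers = cache_headers("/account/settings")
default_headers = cache_headers("/blog/post-1")
```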

Cache invalidation with CDNs

Cache invalidation with CDNs refers to the process of updating or purging cached content stored in the CDN. When the original content changes, it is important to ensure that the updated version is served to users. CDN cache invalidation mechanisms allow organizations to selectively invalidate or refresh cached content, ensuring that users receive the most up-to-date information. This can be achieved through manual purging or through automated processes triggered by content updates or time-based expiration.